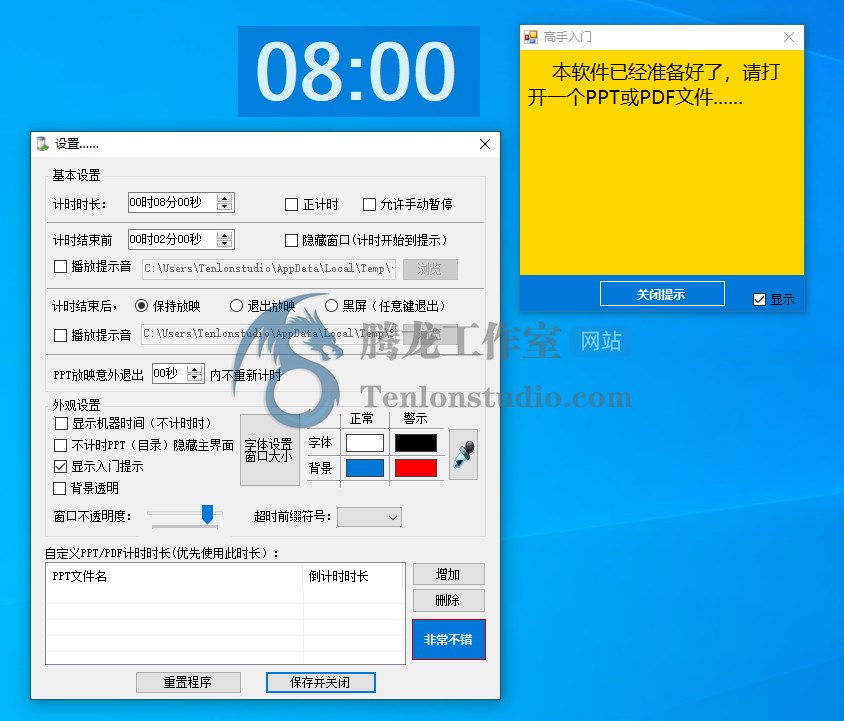

The screenshot above shows the result.

Download: https://github.com/Amd794/kanleying. Pair it with https://www.52pojie.cn/thread-953874-1-1.html to keep track of comic updates in real time, if you know what I mean!

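Notes:

- Install the dependencies before running: open a terminal and run `pip install -r requirements.txt` (optionally inside a virtual environment).

- If you want each chapter compressed into a backup archive after it downloads, change the WinRAR.exe path in the `compress` functions to your own install location, e.g. `D:/Sorfwares/WinRAR/WinRAR.exe`.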

- Comment out whatever you don't need; for example, I don't need the PDF or the compressed archive. These steps all live in `Comic.download_images` in `kanleying.py`:

```python
@staticmethod
def download_images(images_dict):
    images_url = images_dict.get('images_url')
    a_title = images_dict.get('a_title').strip(' ')
    comic_title = images_dict.get('comic_title').strip(' ')
    print(f'Downloading {comic_title}-{a_title}\n')
    # Output folder: ./<script name>/<comic title>/<chapter title>/
    script_name = os.path.splitext(os.path.basename(__file__))[0]
    file_path = f'./{script_name}/{comic_title}/{a_title}/'
    os.makedirs(file_path, exist_ok=True)
    download(images_url, file_path, 10)  # 10 download threads

    # Downloaded images are named 0.jpg, 1.jpg, ...; sort them numerically
    suffix = ('jpg', 'png', 'gif', 'jpeg')
    file_list = sorted(
        [file_path + name for name in os.listdir(file_path) if name.endswith(suffix)],
        key=lambda x: int(os.path.basename(x).split('.')[0])
    )
    file_name = f'{file_path}/{comic_title}-{a_title}'

    # Optional: HTML reader page
    print(f'Generating HTML for {comic_title}-{a_title}\n')
    Comic.render_to_html(f'{file_name}.html', a_title,
                         [str(x) + '.jpg' for x in range(len(file_list))])

    # Optional: PDF
    print(f'Generating PDF for {comic_title}-{a_title}\n')
    Comic.make_pdf(f'{file_name}.pdf', file_list)

    # Optional: password note plus encrypted archive
    comment = {
        'Website': 'https://amd794.com',
        'Password_1': '百度云',  # "Baidu Netdisk"
        'Password_2': f'{comic_title}-{a_title}',
    }
    with open(f'{file_path}/Password.txt', 'w', encoding='utf-8') as f:
        f.write(str(comment))
    print(f'Compressing {comic_title}-{a_title}\n')
    Comic.compress(f'{file_name}.rar', f'{file_path}/*', f'{comic_title}-{a_title}')
```
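The WinRAR path is hardcoded in both `compress` functions. If you'd rather not edit the source on every machine, here is a minimal sketch of a lookup helper. It is not part of the original scripts: `find_winrar` and the `WINRAR_PATH` environment variable are hypothetical names, and the lookup order (environment variable, then `shutil.which`, then the post's example path) is an assumption.

```python
import os
import shutil
from typing import Optional

# The example path from the notes above; adjust to your own install
DEFAULT_WINRAR = r'D:/Sorfwares/WinRAR/WinRAR.exe'


def find_winrar(default: str = DEFAULT_WINRAR) -> Optional[str]:
    """Return a usable WinRAR/rar executable path, or None if none is found.

    Hypothetical lookup order:
    1. the WINRAR_PATH environment variable,
    2. WinRAR.exe or rar found on PATH,
    3. the hardcoded default path.
    """
    env_path = os.environ.get('WINRAR_PATH')
    if env_path and os.path.isfile(env_path):
        return env_path
    for name in ('WinRAR.exe', 'rar'):
        found = shutil.which(name)
        if found:
            return found
    if os.path.isfile(default):
        return default
    return None
```

You would then pass `executable=find_winrar()` to `subprocess.Popen` in `compress`, and skip compression entirely when it returns `None`.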

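Double-click the file shown in the screenshot to run it, then enter a comic URL, e.g. https://m.happymh.com/manga/wuliandianfeng

At the `>>>:` prompt, enter `0` to download every chapter, `>5` to download chapter 6 and everything after it, just press Enter to download the latest chapter, or list specific chapter numbers separated by spaces (e.g. `2 6 9`) to download only those.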
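The input rules above boil down to mapping what you type at the `>>>:` prompt onto positions in the chapter list, assuming the list is ordered oldest first (which is why an empty input picks the last entry as the latest chapter). A minimal sketch; `parse_selection` is a hypothetical helper, since the original handles these cases inline in `main()`:

```python
from typing import List


def parse_selection(index: str, total: int) -> List[int]:
    """Turn prompt input into 0-based positions in a chapter list of length `total`.

      ''      -> latest chapter only (the last entry)
      '0'     -> every chapter
      '>5'    -> chapter 6 onwards
      '2 6 9' -> chapters 2, 6 and 9 (1-based input)
    """
    index = index.strip()
    if not index:
        return [total - 1] if total else []
    if '>' in index:
        start = int(index.split('>')[-1])  # '>5' skips the first 5 chapters
        return list(range(start, total))
    if index == '0':
        return list(range(total))
    return [int(i) - 1 for i in index.split()]
```

For example, with 10 chapters, `parse_selection('2 6 9', 10)` gives positions `[1, 5, 8]`.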

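Supported sites include the following, among others (see also the `Comic.rules_dict` entries in `kanleying.py`). If you know a good site, please tell me. The erased URLs are a little easter egg; sharp-eyed readers will find them.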
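Which rule set gets used depends on the "host key" that `main()` extracts from the URL with a regex and looks up in `Comic.rules_dict`. As an alternative sketch, the same key can be read from the parsed hostname with `urllib.parse`. `split_host` is a hypothetical helper. It assumes a single-label top-level domain such as `.com` or `.biz`, and for hosts with several subdomains it returns only the label before the TLD, which differs from the original regex.

```python
from urllib.parse import urlsplit


def split_host(url: str):
    """Return (host_key, host_url) for a comic URL.

    host_key is the label just before the top-level domain (the key used in
    Comic.rules_dict); host_url is the site root with a trailing slash.
    """
    parts = urlsplit(url)
    labels = parts.hostname.split('.')
    host_key = labels[-2] if len(labels) >= 2 else labels[0]
    host_url = f'{parts.scheme}://{parts.netloc}/'
    return host_key, host_url
```

For the example URL in this post, `split_host('https://m.happymh.com/manga/wuliandianfeng')` yields `('happymh', 'https://m.happymh.com/')`, the same pair the original regex produces.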

try_to_fix.py

```python
#!/usr/bin/python3
# -*- coding: utf-8 -*-
# Time   : 2020/3/30 23:49
# Author : Amd794
# Email  : 2952277346@qq.com
# Github : https://github.com/Amd794
import glob
import os
import platform as pf
import subprocess

import requests
from PIL import Image, ImageFont, ImageDraw

requests.packages.urllib3.disable_warnings()


def create_img(text, img_save_path):
    """Draw `text` on a white placeholder image for an image that could not be downloaded."""
    font_size = 24
    lines = text.split('\n')
    im = Image.new("RGB", (len(text) * 12, len(lines) * (font_size + 5)), '#fff')
    dr = ImageDraw.Draw(im)
    try:
        font = ImageFont.truetype(r"C:\Windows\Fonts\STKAITI.TTF", font_size)  # Windows font
    except OSError:
        font = ImageFont.load_default()  # the Windows font is missing on other systems
    dr.text((0, 0), text, font=font, fill="blue")
    im.save(img_save_path)


def make_pdf(pdf_name, file_list):
    """Combine the images in file_list, in order, into one PDF."""
    images = [Image.open(path) for path in file_list]
    # PDF pages cannot be RGBA, so convert every page (including the first) to RGB
    images = [img.convert('RGB') if img.mode == 'RGBA' else img for img in images]
    images[0].save(pdf_name, "PDF", resolution=100.0, save_all=True, append_images=images[1:])


def try_download_error_img(error_file):
    """Retry every "url name" line in error_file; keep only the lines that still fail."""
    fail_url = []
    with open(error_file) as f:
        lines = [line for line in f.read().split('\n') if line != '']
    for line in lines:
        image_url, name = line.rsplit(' ', 1)
        try:
            response = requests.get(image_url, verify=False, timeout=30)
        except requests.exceptions.RequestException:
            response = None
        if response:  # a Response is truthy only for status codes below 400
            with open(name, 'wb') as f:
                f.write(response.content)
            print(f'Succeeded: {image_url}-{name}')
        else:
            fail_url.append(image_url + ' ' + name + '\n')
            print(f'Failed: {image_url}-{name}')
            create_img(f'Notice: {image_url}-{name} is no longer available', name)
    if fail_url:
        # Write back only the URLs that still fail, so the script can be re-run later
        with open(error_file, 'w') as f:
            f.write(''.join(fail_url))
    else:
        print('All done')
        os.remove(error_file)


def compress(target, source, pwd='', delete_source=False):
    """
    Compress with an optional password, optionally deleting the source data.
    On Windows, call WinRAR's rar program;
    on Linux and other systems, call the zip command (zip -r -P <pwd> target source).
    Source files are not deleted by default.
    """
    if pf.system() == "Windows":
        pwd_switch = f'-p{pwd}' if pwd else ''
        # Skip existing archives and scripts
        cmd = f'rar a {pwd_switch} -x*.rar -x*.py {target} {source}'
        # Change this to your own WinRAR install path
        p = subprocess.Popen(cmd, executable=r'D:/Sorfwares/WinRAR/WinRAR.exe')
        p.wait()
    else:
        # zip has no "a" command and takes its password via -P; without a shell,
        # the wildcard in `source` has to be expanded by Python
        cmd = ['zip', '-r']
        if pwd:
            cmd += ['-P', pwd]
        cmd += [target] + glob.glob(source) + ['-x', '*.rar', '*.py']
        subprocess.run(cmd)
    if delete_source:
        for path in glob.glob(source):
            if os.path.isfile(path):
                os.remove(path)


if __name__ == '__main__':
    # Run this inside a chapter folder that contains error_urls.txt
    error_file = 'error_urls.txt'
    file_name = ''
    for img_file_name in os.listdir('.'):
        if img_file_name.endswith('pdf'):
            file_name = os.path.splitext(img_file_name)[0]
    print(f'Now trying to repair ---> {file_name}')
    if os.path.exists(error_file):
        try_download_error_img(error_file)
    suffix = ('jpg', 'png', 'gif', 'jpeg')
    file_list = ['./' + name for name in os.listdir('.') if name.endswith(suffix)]
    # Images are named 0.jpg, 1.jpg, ...; sort them numerically
    file_list.sort(key=lambda x: int(os.path.basename(x).split('.')[0]))
    # print(file_list)
    make_pdf(file_name + '-重构' + '.pdf', file_list)  # "-重构" = "-rebuilt"
    if os.path.exists(file_name + '.pdf'):
        os.remove(file_name + '.pdf')
    print(file_name + '-重构' + '.pdf' + ' ---> successful')
    compress(f'{file_name}.rar', '*', file_name)
```
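The `compress` function above shells out to WinRAR on Windows and to `zip` elsewhere. If neither tool is installed and you don't need a password, a minimal sketch using only the standard library could look like this. `compress_zip` is a hypothetical helper, not part of the original scripts. It writes a plain `.zip`, because Python's `zipfile` can read encrypted archives but cannot create them.

```python
import glob
import os
import zipfile


def compress_zip(target, source_pattern, exclude_suffixes=('.rar', '.py', '.zip')):
    """Pack the files matching source_pattern into the .zip archive `target`.

    Mirrors the -x*.rar -x*.py exclusions above; no password is set.
    Returns the list of files that were packed.
    """
    # Expand the wildcard before the archive exists, so it never includes itself
    files = [p for p in glob.glob(source_pattern)
             if os.path.isfile(p) and not p.endswith(exclude_suffixes)]
    with zipfile.ZipFile(target, 'w', compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(files):
            zf.write(path, arcname=os.path.basename(path))  # store without folders
    return files
```

Inside a chapter folder, `compress_zip(f'{file_name}.zip', '*')` would take the place of the `compress(...)` call at the end of `try_to_fix.py`.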

kanleying.py

```python
#!/usr/bin/python3
# -*- coding: utf-8 -*-
# Time   : 2020/3/24 22:37
# Author : Amd794
# Email  : 2952277346@qq.com
# Github : https://github.com/Amd794
import ast
import glob
import json
import os
import platform as pf
import re
import subprocess
from pprint import pprint

import pyquery
from PIL import Image, ImageFile

from threading_download_images import get_response, download

# Let Pillow open images that were only partially downloaded
ImageFile.LOAD_TRUNCATED_IMAGES = True


class Util(object):

    @staticmethod
    def make_pdf(pdf_name, file_list):
        """Combine the images in file_list, in order, into one PDF."""
        images = [Image.open(path) for path in file_list]
        # PDF pages cannot be RGBA, so convert every page (including the first) to RGB
        images = [img.convert('RGB') if img.mode == 'RGBA' else img for img in images]
        images[0].save(pdf_name, "PDF", resolution=100.0, save_all=True, append_images=images[1:])

    @staticmethod
    def render_to_html(html, title, imgs: list):
        """Fill template.html with this chapter's images and links to every chapter."""
        chapter_num = len(Comic.detail_dicts)
        template_path = 'template.html'
        imgs_html = ''
        lis_html = ''
        for img in imgs:
            imgs_html += f'<img src="{img}" alt="" style="width: 100%">\n'
        for detail in Comic.detail_dicts:
            a_title = detail.get('a_title')
            comic_title = detail.get('comic_title')
            lis_html += f'<li><a href="../{a_title}/{comic_title}-{a_title}.html" rel="nofollow">{a_title}</a></li>\n'
        with open(template_path, 'r', encoding='utf-8') as r:
            render_data = r.read()
        # Plain str.replace: re.sub would treat backslashes in the inserted text as escapes
        render_data = render_data.replace('{{title}}', title)
        render_data = render_data.replace('{{imgs}}', imgs_html)
        render_data = render_data.replace('{{lis_html}}', lis_html)
        render_data = render_data.replace('{{chapter_num}}', str(chapter_num))
        with open(html, 'w', encoding='utf-8') as w:
            w.write(render_data)

    @staticmethod
    def compress(target, source, pwd='', delete_source=False):
        """
        Compress with an optional password, optionally deleting the source data.
        On Windows, call WinRAR's rar program;
        on Linux and other systems, call the zip command (zip -r -P <pwd> target source).
        Source files are not deleted by default.
        """
        if pf.system() == "Windows":
            pwd_switch = f'-p{pwd}' if pwd else ''
            # -zcomment.txt: read the archive comment from comment.txt in the working directory
            cmd = f'rar a {pwd_switch} -zcomment.txt {target} {source}'
            # Change this to your own WinRAR install path
            p = subprocess.Popen(cmd, executable=r'D:/Sorfwares/WinRAR/WinRAR.exe')
            p.wait()
        else:
            # zip has no "a" command and takes its password via -P; without a shell,
            # the wildcard in `source` has to be expanded by Python
            cmd = ['zip', '-r']
            if pwd:
                cmd += ['-P', pwd]
            cmd += [target] + glob.glob(source)
            subprocess.run(cmd)
        if delete_source:
            for path in glob.glob(source):
                if os.path.isfile(path):
                    os.remove(path)


class Comic(Util):
    kanleying_type = {
        'detail_lis': '#chapterlistload li',
        'comic_title': '.banner_detail .info h1',
        'comic_pages': 'div.comicpage div',
    }
    mm820_type = {
        'detail_lis': '.chapter-list li',
        'comic_title': '.title-warper h1',
        'comic_pages': 'div.comiclist div',
    }
    # CSS selectors per site, keyed by the host key parsed from the URL:
    # detail_lis = chapter list items, comic_title = title, comic_pages = image containers
    rules_dict = {
        # https://www.mh1234.com/
        'mh1234': {
            'detail_lis': '#chapter-list-1 li',
            'comic_title': '.title h1',
        },
        # https://www.90ff.com/
        '90ff': {
            'detail_lis': '#chapter-list-1 li',
            'comic_title': '.book-title h1',
        },
        # https://www.36mh.com/
        '36mh': {
            'detail_lis': '#chapter-list-4 li',
            'comic_title': '.book-title h1',
        },
        # https://www.18comic.biz/
        '18comic': {
            'detail_lis': '.btn-toolbar a',
            'comic_title': '.panel-heading div',
            'comic_pages': '.panel-body .row div',
        },
        # https://m.happymh.com/
        'happymh': {
            'comic_title': '.mg-title',
            'comic_pages': '.scan-list div',
        },
    }
    detail_dicts = []
    current_host_key = ''

    @staticmethod
    def _happymh(response, comic_title):
        # The chapter list is embedded in the page source as JSON-style text
        chapter_url = re.findall('"url":"(.*?)",', response.text)
        chapter_name = re.findall('"chapterName":"(.*?)",', response.text)
        for name, url in zip(chapter_name, chapter_url):
            detail_dict = {
                # Decode \uXXXX escapes with json instead of eval() on page content
                'a_title': json.loads('"' + name + '"'),
                'a_href': url,
                'comic_title': comic_title,
            }
            Comic.detail_dicts.append(detail_dict)
        return Comic.detail_dicts[::-1]

    @staticmethod
    def get_detail_dicts(url, host_url, host_key) -> list:
        response = get_response(url)
        pq = pyquery.PyQuery(response.text)
        Comic.current_host_key = host_key
        rule = Comic.rules_dict.get(host_key, '')
        if not rule:
            raise KeyError('This site is not supported yet')
        if Comic.current_host_key == 'happymh':
            comic_title = pq(rule.get('comic_title')).text()
            return Comic._happymh(response, comic_title)

        def detail_one_page(detail_url):
            response = get_response(detail_url)
            pq = pyquery.PyQuery(response.text)
            lis = pq(rule.get('detail_lis'))
            comic_title = pq(rule.get('comic_title')).text()
            if Comic.current_host_key == '18comic' and not lis.length:
                # Single-chapter work: use the first "read" link as the only chapter
                detail_dict = {
                    'a_title': '共一话',  # "one chapter in total"
                    'a_href': host_url + pq('div.read-block a:first-child').attr('href').lstrip('/'),
                    'comic_title': comic_title,
                }
                Comic.detail_dicts.append(detail_dict)
                return Comic.detail_dicts
            print(f'This comic has {len(lis)} chapters')
            for li in lis:
                a_title = pyquery.PyQuery(li)('a').text()
                a_href = pyquery.PyQuery(li)('a').attr('href')
                # Replace characters that are unsafe in file and folder names
                for ch in r'\/:| <.・>?*"':
                    a_title = a_title.replace(ch, '・')
                    comic_title = comic_title.replace(ch, '・')
                if Comic.current_host_key == 'dongmanmanhua':
                    a_title = a_title.split('・')[0]
                detail_dict = {
                    'a_title': a_title,
                    # Relative links are joined onto the site root
                    'a_href': host_url + a_href.lstrip('/') if host_key not in a_href else a_href,
                    'comic_title': comic_title,
                }
                Comic.detail_dicts.append(detail_dict)

        detail_one_page(url)
        # Special case: pyquery doesn't seem to support filters like nth-child(n+3)
        if Comic.current_host_key == 'hmba':
            Comic.detail_dicts = Comic.detail_dicts[9:]
        if Comic.current_host_key == 'dongmanmanhua':
            total_pages = len(pq('.paginate a'))
            for i in range(2, total_pages + 1):
                detail_one_page(url + f'&page={i}')
        Comic.detail_dicts.reverse()
        return Comic.detail_dicts

    # This site paginates each chapter, so it needs special handling
    @staticmethod
    def _mm820(detail_url, pages: int):
        images_url = []
        for i in range(2, pages + 1):
            response = get_response(detail_url + f'?page={i}')
            pq = pyquery.PyQuery(response.text)
            divs = pq(Comic.rules_dict.get(Comic.current_host_key).get('comic_pages'))
            for div in divs:
                img_src = pyquery.PyQuery(div)('img').attr('data-original')
                if not img_src:
                    img_src = pyquery.PyQuery(div)('img').attr('src')
                images_url.append(img_src)
        return images_url

    @staticmethod
    def _cswhcs(pq):
        # Follow the '下一页' ("next page") links and collect the images on each page
        def is_next_url():
            next_url = ''
            fanye = pq('div.fanye')
            if '下一页' in fanye.text():
                next_url = pyquery.PyQuery(fanye)('a:nth-last-child(2)').attr('href')
            if next_url:
                next_url = 'https://cswhcs.com' + next_url
            else:
                next_url = None
            return next_url

        images_url = []
        next_url = is_next_url()
        while next_url:
            print(next_url)
            response = get_response(next_url)
            # Rebinding pq also updates the pq that is_next_url() reads (closure)
            pq = pyquery.PyQuery(response.text)
            divs = pq(Comic.rules_dict.get(Comic.current_host_key).get('comic_pages'))
            for div in divs:
                img_src = pyquery.PyQuery(div)('img').attr('data-original')
                if not img_src:
                    img_src = pyquery.PyQuery(div)('img').attr('src')
                images_url.append(img_src)
            # Check whether there is another page
            next_url = is_next_url()
        return images_url

    @staticmethod
    def _dongmanmanhua(detail_url):
        images_url = []
        detail_url = 'https:' + detail_url  # chapter links are protocol-relative
        response = get_response(detail_url)
        pq = pyquery.PyQuery(response.text)
        imgs = pq(Comic.rules_dict.get(Comic.current_host_key).get('comic_pages'))
        for img in imgs:
            images_url.append(pyquery.PyQuery(img).attr('data-url'))
        return images_url

    @staticmethod
    def _nxueli(detail_url):
        # The image list sits in an inline script as chapterImages = [...]
        response = get_response(detail_url)
        chapter_path_regex = 'chapterPath = "(.*?)"'
        raw_images = re.search(r'chapterImages = (\[.*?\])', response.text).group(1)
        # Strip backslashes, then parse the list literal safely instead of eval()
        chapter_images = ast.literal_eval(raw_images.replace('\\', ''))
        if Comic.current_host_key == 'nxueli':
            return ['https://images.nxueli.com' + i for i in chapter_images]
        elif Comic.current_host_key == '90ff':
            chapter_path = re.search(chapter_path_regex, response.text).group(1)
            return [f'http://90ff.bfdblg.com/{chapter_path}' + i for i in chapter_images]
        elif Comic.current_host_key == 'mh1234':
            chapter_path = re.search(chapter_path_regex, response.text).group(1)
            return [f'https://img.wszwhg.net/{chapter_path}' + i for i in chapter_images]
        elif Comic.current_host_key == '36mh':
            chapter_path = re.search(chapter_path_regex, response.text).group(1)
            return [f'https://img001.pkqiyi.com/{chapter_path}' + i for i in chapter_images]
        elif Comic.current_host_key == 'manhuaniu':
            return ['https://restp.dongqiniqin.com/' + i for i in chapter_images]

    @staticmethod
    def get_images_url(detail_dict: dict) -> dict:
        images_url = []
        # Groups of sites that share the same scraping logic
        nxueli_type = ('nxueli', '90ff', 'manhuaniu', '36mh', 'mh1234')
        cswhcs_type = ('cswhcs', 'kanleying', 'qinqinmh')
        mm820_type = ('mm820', 'hanmzj')
        detail_url = detail_dict.get('a_href')
        a_title = detail_dict.get('a_title')
        comic_title = detail_dict.get('comic_title')
        if Comic.current_host_key == 'dongmanmanhua':
            images_url = Comic._dongmanmanhua(detail_url)
            return {'images_url': images_url, 'a_title': a_title, 'comic_title': comic_title}
        elif Comic.current_host_key in nxueli_type:
            images_url = Comic._nxueli(detail_url)
            return {'images_url': images_url, 'a_title': a_title, 'comic_title': comic_title}
        elif Comic.current_host_key == 'happymh':
            response = get_response(detail_url)
            # Parse the embedded "var scans = [...]" list safely instead of eval()
            imgs_url = ast.literal_eval(re.search('var scans = (.*?);', response.text).group(1))
            imgs_url = [i for i in imgs_url if isinstance(i, dict)]
            for img_url in imgs_url:
                images_url.append(img_url['url'])
            return {'images_url': images_url, 'a_title': a_title, 'comic_title': comic_title}
        # Generic case: read <img> tags using the site's comic_pages selector
        response = get_response(detail_url)
        pq = pyquery.PyQuery(response.text)
        divs = pq(Comic.rules_dict.get(Comic.current_host_key).get('comic_pages'))
        for div in divs:
            # Lazy-loaded images keep the real URL in data-original
            img_src = pyquery.PyQuery(div)('img').attr('data-original')
            if not img_src:
                img_src = pyquery.PyQuery(div)('img').attr('src')
            images_url.append(img_src)
        # Special cases
        if Comic.current_host_key in cswhcs_type:
            images_url.extend(Comic._cswhcs(pq))
        if Comic.current_host_key in mm820_type:
            pages = len(pq('.selectpage option'))  # number of pages in the chapter
            images_url.extend(Comic._mm820(detail_url, pages))
        if Comic.current_host_key == '18comic':
            images_url = [img for img in images_url if img]
        return {'images_url': images_url, 'a_title': a_title, 'comic_title': comic_title}

    @staticmethod
    def download_images(images_dict):
        images_url = images_dict.get('images_url')
        a_title = images_dict.get('a_title').strip(' ')
        comic_title = images_dict.get('comic_title').strip(' ')
        print(f'Downloading {comic_title}-{a_title}\n')
        # Output folder: ./<script name>/<comic title>/<chapter title>/
        script_name = os.path.splitext(os.path.basename(__file__))[0]
        file_path = f'./{script_name}/{comic_title}/{a_title}/'
        os.makedirs(file_path, exist_ok=True)
        download(images_url, file_path, 10)  # 10 download threads
        # Downloaded images are named 0.jpg, 1.jpg, ...; sort them numerically
        suffix = ('jpg', 'png', 'gif', 'jpeg')
        file_list = sorted(
            [file_path + name for name in os.listdir(file_path) if name.endswith(suffix)],
            key=lambda x: int(os.path.basename(x).split('.')[0])
        )
        file_name = f'{file_path}/{comic_title}-{a_title}'
        print(f'Generating HTML for {comic_title}-{a_title}\n')
        Comic.render_to_html(f'{file_name}.html', a_title,
                             [str(x) + '.jpg' for x in range(len(file_list))])
        print(f'Generating PDF for {comic_title}-{a_title}\n')
        Comic.make_pdf(f'{file_name}.pdf', file_list)
        comment = {
            'Website': 'https://amd794.com',
            'Password_1': '百度云',  # "Baidu Netdisk"
            'Password_2': f'{comic_title}-{a_title}',
        }
        with open(f'{file_path}/Password.txt', 'w', encoding='utf-8') as f:
            f.write(str(comment))
        print(f'Compressing {comic_title}-{a_title}\n')
        Comic.compress(f'{file_name}.rar', f'{file_path}/*', f'{comic_title}-{a_title}')


def main():
    url = input('Comic URL: ').strip()
    try:
        host_key = re.match(r'https?://\w+\.(.*?)\.\w+/', url).group(1)  # kanleying
        host_url = re.match(r'https?://\w+\.(.*?)\.\w+/', url).group()  # https://www.kanleying.com/
    except AttributeError:
        # No subdomain
        host_key = re.match(r'https?://(.*?)\.\w+/', url).group(1)  # kanleying
        host_url = re.match(r'https?://(.*?)\.\w+/', url).group()  # https://kanleying.com/
    print(host_url, host_key)
    detail_dicts = Comic.get_detail_dicts(url, host_url, host_key)
    while True:
        # pprint(list(enumerate(detail_dicts, 1)))
        index = input('>>>:').strip()
        try:
            if '>' in index:
                # ">5": every chapter after chapter 5
                selected = detail_dicts[int(index.split('>')[-1]):]
            elif index == '0':
                # Download everything
                selected = detail_dicts
            elif not index:
                # Download the latest chapter (without removing it from the list)
                selected = detail_dicts[-1:]
            else:
                # Download specific chapters, 1-based: "2 6 9"
                indexes = list(map(int, index.split()))
                pprint(indexes)
                selected = [detail_dicts[i - 1] for i in indexes]
        except (ValueError, IndexError):
            print('Invalid input, please try again')
            continue
        for detail_dict in selected:
            images_dict = Comic.get_images_url(detail_dict)
            Comic.download_images(images_dict)


if __name__ == '__main__':
    main()
```
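`render_to_html` reads a `template.html` that ships with the repository but is not reproduced in this post. The only thing the code relies on is four placeholders: `{{title}}`, `{{imgs}}`, `{{lis_html}}` and `{{chapter_num}}`. Here is a minimal sketch of that substitution with a hypothetical stand-in template. The markup is invented for illustration; only the placeholder names come from `render_to_html`.

```python
# Hypothetical stand-in for template.html; only the placeholder names are real
TEMPLATE = """<!DOCTYPE html>
<html>
<head><meta charset="utf-8"><title>{{title}}</title></head>
<body>
<h1>{{title}}</h1>
{{imgs}}
<p>{{chapter_num}} chapters</p>
<ul>
{{lis_html}}
</ul>
</body>
</html>
"""


def render(template: str, values: dict) -> str:
    """Replace every {{name}} placeholder with values[name] via plain string replacement."""
    for name, value in values.items():
        template = template.replace('{{' + name + '}}', str(value))
    return template


page = render(TEMPLATE, {
    'title': 'Chapter 1',
    'imgs': '<img src="0.jpg" alt="" style="width: 100%">\n',
    'lis_html': '<li><a href="../Chapter 1/Demo-Chapter 1.html">Chapter 1</a></li>\n',
    'chapter_num': 1,
})
```

Plain `str.replace` is used rather than `re.sub` so that backslashes or `\1`-style sequences in chapter titles are inserted literally instead of being read as regex escapes.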

threading_download_images.py

```python
#!/usr/bin/python3
# -*- coding: utf-8 -*-
# Time   : 2020/3/28 19:22
# Author : Amd794
# Email  : 2952277346@qq.com
# Github : https://github.com/Amd794
import os
import threading
from shutil import copyfile

import requests
from fake_useragent import UserAgent
from PIL import Image, ImageFont, ImageDraw

requests.packages.urllib3.disable_warnings()

# One lock shared by every download thread; it guards the shared list of image URLs
glock = threading.Lock()


# todo: fetch a URL and return the response
def get_response(url, error_file_path='.', max_count=3, timeout=30, ua_type='random', name=''):
    ua = UserAgent()
    header = {
        'User-Agent': getattr(ua, ua_type),
        # 'User-Agent': 'Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.61 Mobile Safari/537.36',
        'referer': url,
    }
    for count in range(max_count):
        try:
            response = requests.get(url=url, headers=header, verify=False, timeout=timeout)
            response.raise_for_status()  # raises requests.HTTPError for 4xx/5xx status codes
            response.encoding = 'utf-8'
            return response
        except requests.exceptions.RequestException:
            print(f'\033[22;33;m{url} {name} attempt {count + 1}/{max_count} failed ({timeout}s timeout)\033[m')
    # Every attempt failed: append "url name" to error_urls.txt so try_to_fix.py can retry it
    print(f'\033[22;31;m{url} still failing after {max_count} attempts, giving up...\033[m')
    os.makedirs(error_file_path, exist_ok=True)
    with open(os.path.join(error_file_path, 'error_urls.txt'), 'a') as f:
        f.write(url + ' ' + name + '\n')
    return None


# todo: multithreading
def thread_run(threads_num, target, args: tuple):
    threads = []
    for _ in range(threads_num):
        t = threading.Thread(target=target, args=args)
        t.start()
        threads.append(t)
    for t in threads:
        t.join()


# todo: download images
def download_images(file_path, images: list):

    def create_img(text, img_save_path):
        """Draw `text` on a white placeholder image for a URL that could not be downloaded."""
        font_size = 24
        lines = text.split('\n')
        im = Image.new("RGB", (len(text) * 12, len(lines) * (font_size + 5)), '#fff')
        dr = ImageDraw.Draw(im)
        try:
            font = ImageFont.truetype(r"C:\Windows\Fonts\STKAITI.TTF", font_size)  # Windows font
        except OSError:
            font = ImageFont.load_default()  # the Windows font is missing on other systems
        dr.text((0, 0), text, font=font, fill="blue")
        im.save(img_save_path)

    os.makedirs(file_path, exist_ok=True)
    while True:
        # Check and pop under the shared lock so two threads never take the same URL
        # or race between the emptiness check and pop()
        with glock:
            if not images:
                break
            image_url = images.pop()
            # After pop(), len(images) is this URL's index in the original list (0.jpg, 1.jpg, ...)
            file_name = str(len(images)) + '.jpg'
            print(f'{threading.current_thread()}: downloading {file_name} ---> {image_url}')
        response = get_response(image_url, file_path, name=file_name)
        if response:
            with open(os.path.join(file_path, file_name), 'wb') as f:
                f.write(response.content)
        else:
            create_img(f'Notice: {image_url} is no longer available', os.path.join(file_path, file_name))
    # If any image failed, copy the repair script next to the images
    if 'error_urls.txt' in os.listdir(file_path):
        copyfile('./try_to_fix.py', os.path.join(file_path, 'try_to_fix.py'))


def download(image_list: list, file_path=os.getcwd(), threads_num=5):
    thread_run(threads_num, download_images, (file_path, image_list))


if __name__ == '__main__':
    imgs = ['h15719904026351411247.jpg?x-oss-process=image/quality,q_90',
            'https://cdn.dongmanmanhua.cn/15719904028051411242.jpg?x-oss-process=image/quality,q_90',
            'https://cdn.dongmanmanhua.cn/15719904029381411248.jpg?x-oss-process=image/quality,q_90', ]
    script_name = os.path.splitext(os.path.basename(__file__))[0]
    download(imgs, f'./{script_name}/test/')
```
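The threads above share one list and a lock to hand out URLs. As an alternative sketch, `concurrent.futures.ThreadPoolExecutor` can hand out the work itself, and `map()` returns results in input order, so the `i.jpg` naming needs no shared state. `download_all` is a hypothetical helper, not part of the original code. `fetch` stands for anything that returns the image bytes or `None` on failure, for example a small wrapper around `get_response`.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Optional


def download_all(urls: List[str],
                 fetch: Callable[[str], Optional[bytes]],
                 threads_num: int = 5) -> Dict[str, Optional[bytes]]:
    """Fetch every URL on a thread pool; the URL at position i maps to "i.jpg".

    A None value marks a failed download (where the original draws a placeholder image).
    """
    names = [f'{i}.jpg' for i in range(len(urls))]
    with ThreadPoolExecutor(max_workers=threads_num) as pool:
        # map() yields results in the same order as `urls`, whatever order threads finish in
        results = list(pool.map(fetch, urls))
    return dict(zip(names, results))
```

The caller would then write each non-`None` value to `os.path.join(file_path, name)` and create a placeholder image for each `None`, just as `download_images` does.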

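That's all for now; I'll add more if anything else comes to mind. If you like it, give it a thumbs-up, and if you know a good site, remember to share it with me.

Finally, here's a ready-built version:

Link: https://pan.baidu.com/s/1377l5KgQIe5u_l79QXejSw

Extraction code: 8bzp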
